Research Participation

To participate in ongoing linguistic research in the department, visit the sign-up page to learn more about the qualifications and sign up!

Speech Perception

The speech perception research in the lab centers on questions of language sound change and synchronic phonological structure. Speech perception research is supported by a two-room suite, where participants are tested in small sound-isolated booths. In addition, we have an eye-tracking lab for the psycholinguistic study of on-line word and sentence processing.

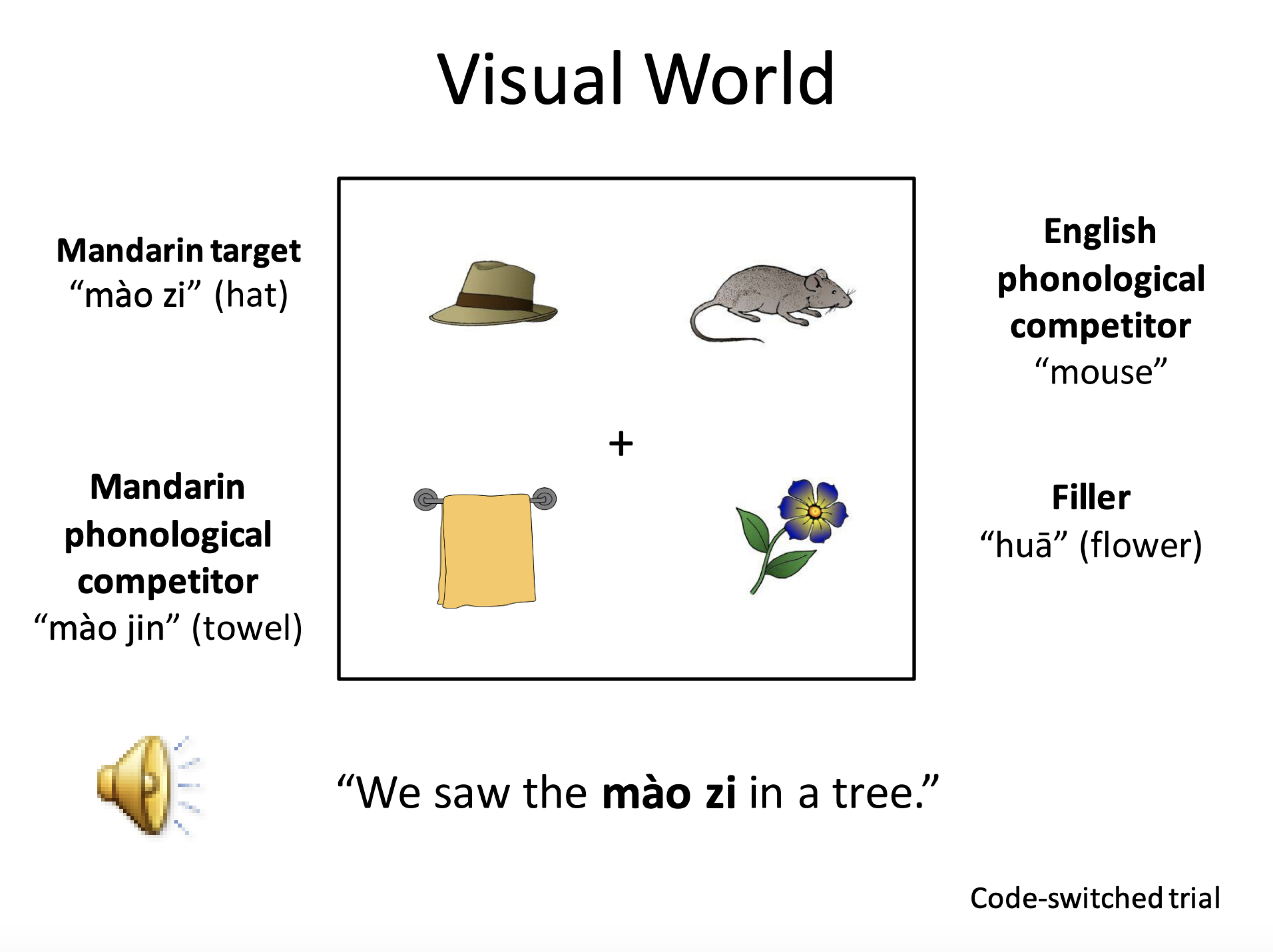

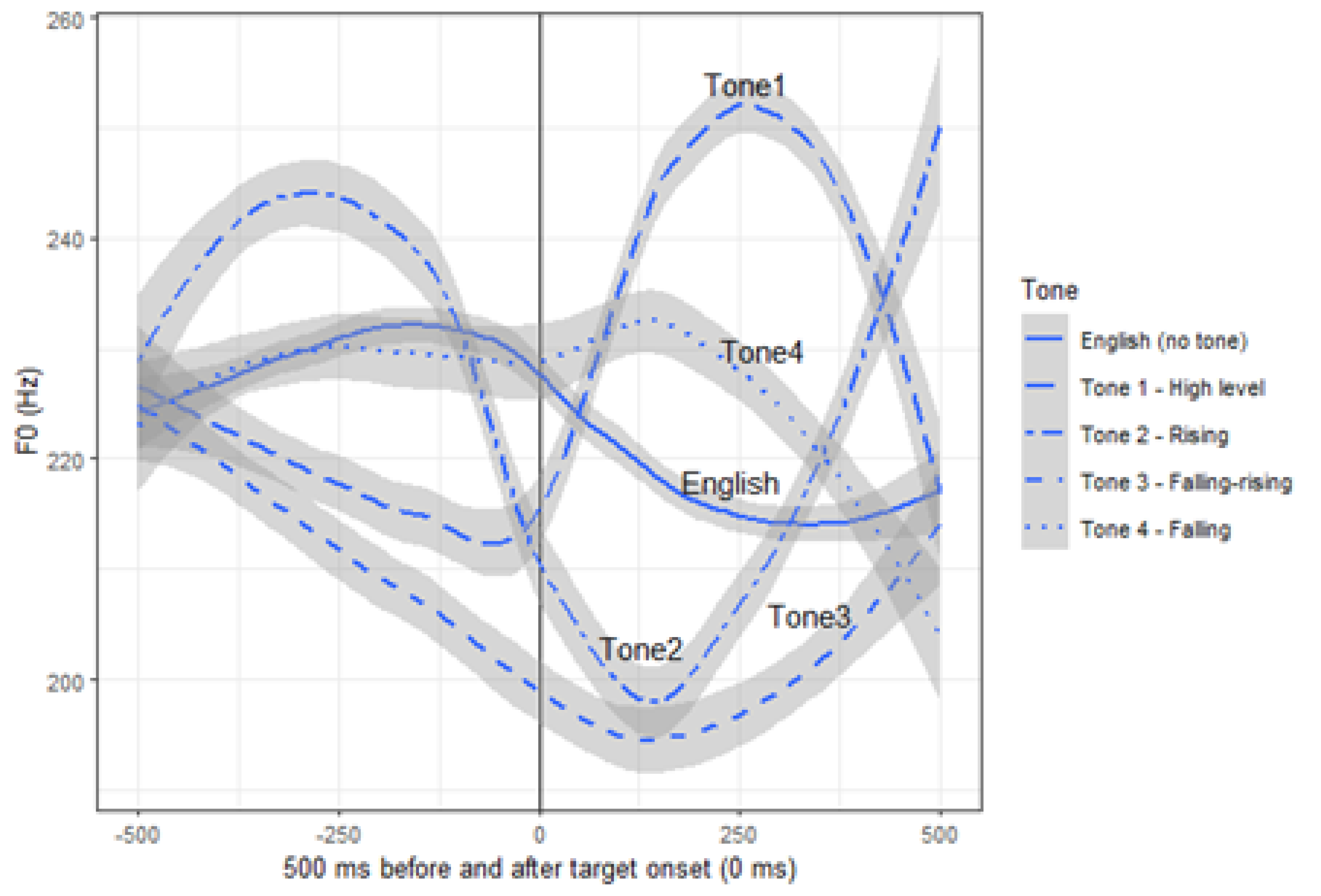

In recent years, research on speech perception in the PhonLab has centered on the cognitive mechanisms involved in using context to process speech: in talker ‘normalization’ (Johnson & Sjerps, 2018; Johnson, 2018), in language effects in code-switching (Shen, 2017), in sound symbolism (Shibata, 2018), in semantic predictability (Manker, 2016), in intonation perception (Dil, 2018), and in sound change in progress (Johnson & Song, 2016).

Dil, Sofea. (2018). Effects of Learning Strategies on Perception of L2 Intonation Patterns. UC Berkeley PhonLab Annual Report, 14. Retrieved from https://escholarship.org/uc/item/4j77s391

Johnson, Keith. (2018). Vocal Tract Length Normalization. UC Berkeley PhonLab Annual Report, 14. Retrieved from https://escholarship.org/uc/item/16c753jz

Johnson, Keith, & Sjerps, Matthias. (2018). Speaker Normalization in Speech Perception. UC Berkeley PhonLab Annual Report, 14. Retrieved from https://escholarship.org/uc/item/2fc6x1ph

Johnson, Keith, & Song, Yidan. (2016). Gradient phonemic contrast in Nanjing Mandarin. UC Berkeley PhonLab Annual Report, 12. Retrieved from https://escholarship.org/uc/item/6fk2m1sw

Manker, Jonathan. (2016). Context, Predictability and Phonetic Attention. UC Berkeley PhonLab Annual Report, 12. Retrieved from https://escholarship.org/uc/item/0xd4v943

Shen, Alice. (2017). Costs and Cues to Code-switched Lexical Access. UC Berkeley PhonLab Annual Report, 13. Retrieved from https://escholarship.org/uc/item/0cs6c0ws

Shibata, Andrew. (2018). The Influence of Dialect in Sound Symbolic Size Perception. UC Berkeley PhonLab Annual Report, 14. Retrieved from https://escholarship.org/uc/item/5s32c04v

Articulatory Phonetics

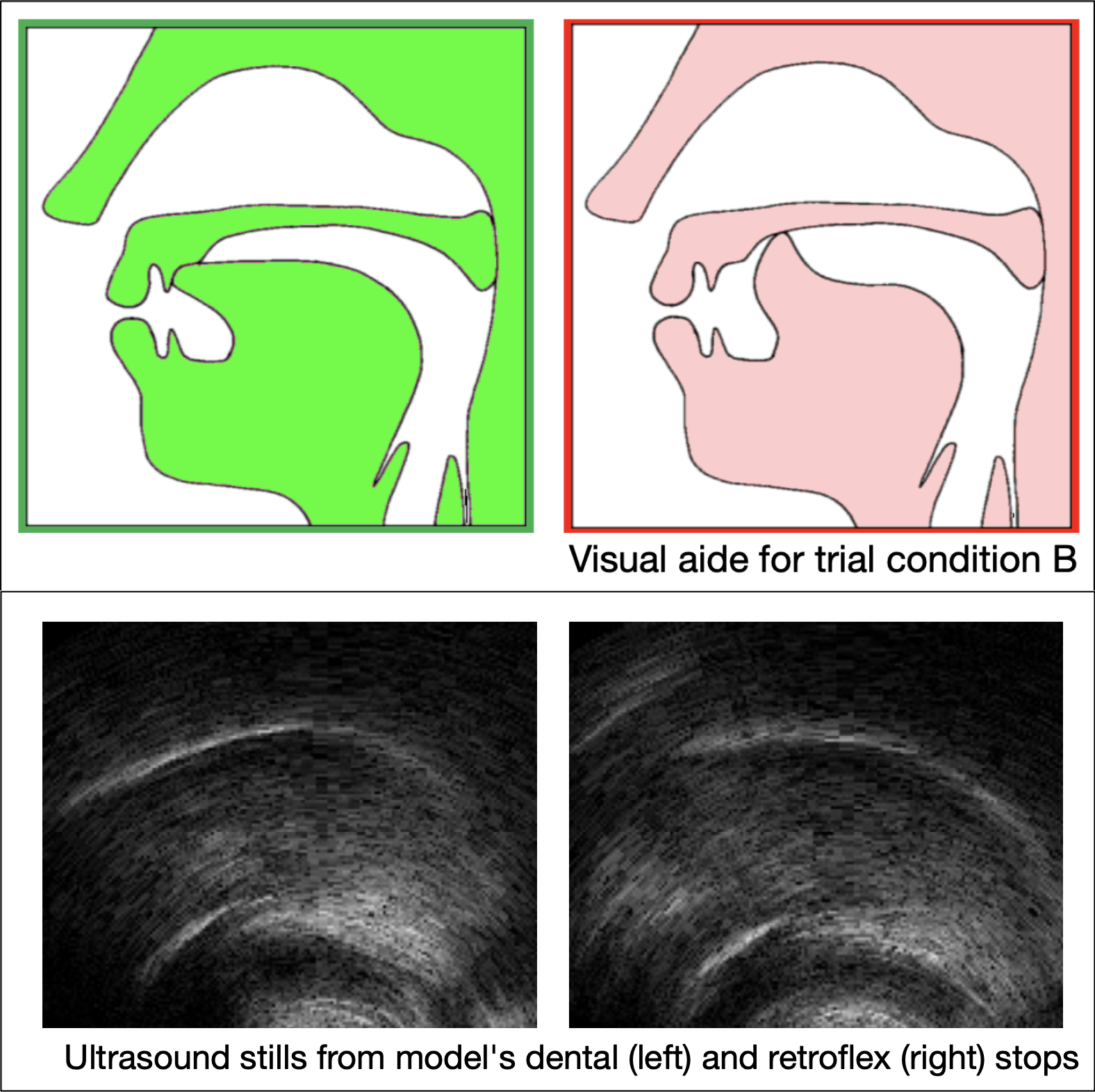

Speech production studies in the lab focus on the human system of articulatory control in English and other languages, both in ordinary speech and in response to experimental manipulations. Facilities for speech production research are housed in a 500-square-foot research lab with a large double-walled sound-attenuated recording booth. The lab also houses equipment for articulatory research using ultrasound, electromagnetic articulography (EMA), aerodynamics, static palatography, electro-palatography (EPG), and electro-glottography (EGG). Access to corpora of acoustic speech production and speech articulation, such as the X-Ray Microbeam Database, also facilitates PhonLab research in this area.

Recent research includes work on gestural magnitude and timing, extra-linguistic variation (e.g. individual anatomical variation), and the effects of articulatory training. Work on gestural timing focuses on complex segments, investigating the coordination of multiple articulations within and between segments. Research investigating the interaction between linguistic and extra-linguistic variation may lead us to a better understanding of sound change. Other projects on articulatory training ask people to alter their articulations in various experimental paradigms, in order to study the limits of speech motor control in adults and the cross-linguistic tuning of speech motor control regimes.

Bakst, Sarah & Keith Johnson. 2018. Modeling the effect of palate shape on the articulatory-acoustics mapping, JASA Express Letters EL71

Bakst, Sarah & Susan Lin. 2015. An ultrasound investigation into articulatory variation in American /s/ and /r/, In The Scottish Consortium for ICPhS 2015, editor, Proceedings of the 18th International Congress of Phonetic Sciences, Glasgow, UK. the University of Glasgow.

Cheng, Andrew, Emily Remirez, and Susan Lin. 2017. An Ultrasound Investigation of Covert Articulation in Rapid Speech. Presented at the Workshop on Dynamic Modeling in Phonetics and Phonology, Chicago Linguistics Society 23, University of Chicago.

Faytak, Matthew. 2017. Articulatory reuse in good-enough speech production strategies. ASA/EAA joint meeting, Boston.

Faytak, Matthew & Keith Johnson. 2016. Evaluating a new measure of fricative source intensity. LabPhon 15, Cornell.

Faytak, Matthew & Lin, Susan. 2015. Articulatory variability and fricative noise in apical vowels, In The Scottish Consortium for ICPhS 2015, editor, Proceedings of the 18th International Congress of Phonetic Sciences, Glasgow, UK. the University of Glasgow.

Kang, Shinae, Keith Johnson, & Gregory Finley. (2016) Effects of native language on compensation for coarticulation, Speech Communication 77, 84-100.

Lapierre, Myriam & Susan Lin. (2019). Cues to Panãra Nasal-Oral Stop Sequence Perception. Proceedings of the 2019 International Congress of Phonetic Sciences (ICPhS 2019). Melbourne, Australia.

Lapierre, Myriam & Susan Lin. (2018). Patterns of nasal coarticulation in Panará. Poster presented at the 16th Conference on Laboratory Phonology (LabPhon16), Lisbon, Portugal.

Lin, Susan; Meg Cychosz, Alice Shen, and Emily Cibelli. 2019. The effects of phonetic training and visual feedback on novel contrast production. In Sasha Calhoun, Paola Escudero, Marija Tabain & Paul Warren (eds.) Proceedings of the 19th International Congress of Phonetic Sciences, Melbourne, Australia.

Phonology

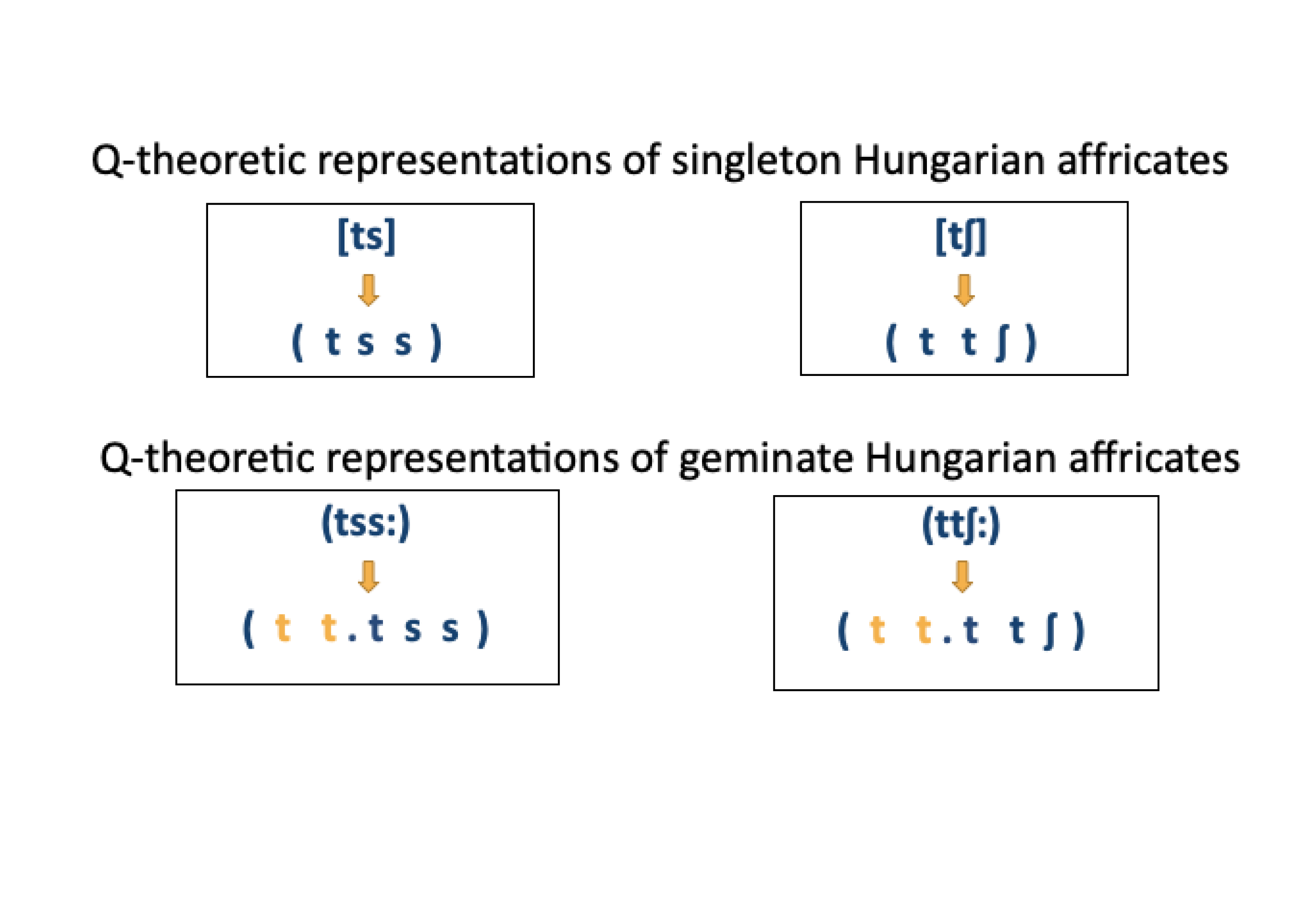

In recent years, there has been considerable work at Berkeley on the analysis, history, and typology of segmental, subsegmental, and prosodic phonology, with special attention to their interfaces with phonetics, morphology, and syntax. This has led to the development of specific theories (subsegmental Q-theory, Optimal Construction Morphology) and numerous single-authored and joint publications by faculty, students, and visitors.

Akumbu, Pius & Larry M. Hyman. 2017. Nasals and low tone in Grassfields noun class prefixes. Nordic Journal of African Studies 26.1-13.

Caballero, Gabriela & Sharon Inkelas. 2018. A construction-based approach to multiple exponence. In Geert Booij (ed.), The construction of words, 111-139. Springer.

Faytak, Matthew. 2017. Sonority in some languages of the Cameroon Grassfields. In Martin J. Ball & Nicole Müller (eds), Challenging sonority, 76-96. Equinox.

Garvin, Karee, Myriam Lapierre, and Sharon Inkelas. 2018. A Q-theoretic approach to distinctive subsegmental timing. Proceedings of the 2018 Annual Meeting of the Linguistic Society of America.

Hyman, Larry M. & William R. Leben. Word prosody II: Tone systems. To appear in Carlos Gussenhoven & Aoju Chen (eds), Handbook of Prosody. Oxford University Press.

Hyman, Larry M. 2017. On reconstructing tone in Proto-Niger-Congo. In Valentin Vydrin & Anastasia Lyakhovich (eds), In the hot yellow Africa, 175-191. St. Petersburg: Nestor-Istoria.

Hyman, Larry M. 2017. Underlying representations and Bantu segmental phonology. In Geoffrey Lindsey & Andrew Nevins (eds), Sonic signatures. 101-116. Benjamins.

Hyman, Larry M. 2018. Towards a typology of tone changes. In Haruo Kubozono & Mikio Giriko (eds), Tonal Change and Neutralization, 7-26. De Gruyter Mouton.

Hyman, Larry M. 2018. The autosegmental approach to tone in Lusoga. In Diane Brentari & Jackson Lee (eds), Shaping Phonology, 47-69. University of Chicago Press.

Hyman, Larry M. 2018. Towards a typology of postlexical tonal neutralizations. In Haruo Kubozono (ed.), Tonal Change and Neutralization, 221-240. De Gruyter Mouton.

Hyman, Larry M. 2018. What is phonological typology? In Larry M. Hyman & Frans Plank (eds), Phonological typology, 1-20. De Gruyter Mouton.

Hyman, Larry M. 2018. What tone teaches us about language. Language 94.698-709.

Hyman, Larry M. 2018. Why underlying representations? Journal of Linguistics. Online version, February 2018. Vol. 54, pp. 591-610 (August 2018).

Hyman, Larry M. 2019. Lusoga noun phrase tonology. In Pius W. Akumbu and Esther P. Chie (eds), Engagement with Africa: Linguistic essays in honor of Ngessimo M. Mutaka, 93-138. Köln: Rüdiger Köppe Verlag.

Hyman, Larry M. 2019. Positional prominence vs. word accent: Is there a difference? In Rob Goedemans, Jeffrey Heinz, and Harry van der Hulst (eds), The study of word stress and accent: theories, methods and data, 60-75. Cambridge University Press.

Hyman, Larry M., Nicholas Rolle, Hannah Sande, Emily Clem, Peter Jenks, Florian Lionnet, John Merrill and Nico Baier. “Niger-Congo linguistic features and typology.” In Ekkehard Wolff (ed.), The Cambridge Handbook of African Linguistics & A History of African Linguistics, 191-245. Cambridge University Press.

Hyman, Larry M., Hannah Sande, Florian Lionnet, Nicholas Rolle, and Emily Clem. “Niger-Congo and adjacent areas”. To appear in Carlos Gussenhoven & Aoju Chen (eds), Handbook of Prosody, Vol. IV, Prosodic systems. Oxford University Press.

Inkelas, Sharon. 2014. The interplay of morphology and phonology. Oxford: Oxford University Press.

Inkelas, Sharon & Eric Wilbanks. 2018. "Directionality effects via distance-based penalty scaling." Proceedings of the 2017 Annual Meeting on Phonology. DOI:http://dx.doi.org/10.3765/amp.v5i0.4256.

Inkelas, Sharon & Stephanie Shih. 2016. Tone melodies in the age of surface correspondence. Proceedings of the 51st Annual Meeting of the Chicago Linguistic Society.

Inkelas, Sharon & Stephanie Shih. 2016. Re-representing phonology: consequences of Q theory. Proceedings of NELS 46.

Iskarous, Khalil & Darya Kavitskaya. 2010. The interaction between contrast, prosody, and coarticulation in structuring phonetic variability. Journal of Phonetics 38: 625–639.

Iskarous, Khalil & Darya Kavitskaya. 2018. Sound change and the structure of synchronic variability: Phonetic and phonological factors in Slavic palatalization. Language 94: 1–41.

Kavitskaya, Darya. 2017. Some recent developments in Slavic phonology. Journal of Slavic Linguistics 25: 389–415.

Kavitskaya, Darya. 2017. Compensatory lengthening and structure preservation revisited yet again. In Claire Bowern, Laurence Horn and Raffaella Zanuttini, eds., On Looking into Words (and Beyond). Berlin: Language Science Press. 41–58.

Katsika, Argyro & Darya Kavitskaya. 2015. The phonetics of /r/ deletion in Samothraki Greek. Journal of Greek Linguistics 15: 34–65.

Kavitskaya, Darya. 2014. Segmental inventory and the evolution of harmony in Crimean Tatar. Turkic Languages 17: 86–114.

Kavitskaya, Darya, Maria Babyonyshev, Theodore Walls, and Elena Grigorenko. 2011. Investigating the effects of phonological memory and syllable complexity in Russian-speaking children with SLI. Journal of Child Language 38: 979–998.

Kavitskaya, Darya & Maria Babyonyshev. 2011. The role of syllable structure: The case of Russian-speaking children with SLI. In Chuck Cairns & Eric Raimy, eds. Handbook of the Syllable. Brill Publishers. 353–371.

Kavitskaya, Darya & Peter Staroverov. 2010. When an interaction is both opaque and transparent: the paradox of fed counterfeeding. Phonology 27: 1–34.

Kavitskaya, Darya. 2006. Perceptual salience and palatalization in Russian. In Louis Goldstein, D. H. Whalen, and C. T. Best, eds. Laboratory Phonology 8. Mouton de Gruyter, Berlin. 589–610.

Kavitskaya, Darya. 2001. Hittite vowel epenthesis and the sonority hierarchy. Diachronica 28: 267–299.

Lionnet, Florian. 2017. A theory of subfeatural representations: the case of rounding harmony in Laal. Phonology 34.523-564.

Lionnet, Florian & Larry M. Hyman. 2018. Phonology. In Tom Güldemann (ed.). The languages and linguistics of Africa. The World of Linguistics 11, 597-703. De Gruyter Mouton.

Merrill, John. 2018. The historical origin of consonant mutation in the Atlantic languages. Doctoral dissertation, U.C. Berkeley. 489pp.

Rolle, Nicholas, Matthew Faytak, and Florian Lionnet. 2017. The distribution of advanced tongue root harmony and interior vowels in the Macro-Sudan Belt. In Patrick Farrell (ed.), Proceedings of the Linguistic Society of America, vol. 2, 10:1-15.

Rolle, Nicholas & Larry M. Hyman. 2019. Phrase-level prosodic smothering in Makonde. In Supplemental Proceedings of the 2018 Annual Meeting on Phonology. Washington, DC: Linguistic Society of America.

Rolle, Nicholas, Florian Lionnet, and Matthew Faytak (in press). Areal patterns in the vowel systems of the Macro-Sudan Belt. Linguistic Typology.

Sande, Hannah. 2018. Cross-word morphologically conditioned scalar tone shift in Guébie. Morphology 28.253-295.

Sande, Hannah & Peter Jenks. 2018. Cophonologies by phrase. Proceedings of NELS 48, vol. 3, 39-53.

Shih, Stephanie, Jordan Ackerman, Noah Hermalin, Sharon Inkelas, and Darya Kavitskaya. 2018. Pokémonikers: A study of sound symbolism and Pokémon names. Proceedings of the 2018 Annual Meeting of the Linguistic Society of America.

Staroverov, Peter & Darya Kavitskaya. 2017. Tundra Nenets consonant sandhi as coalescence. The Linguistic Review. 34: 331–364.

Phonological Learning and Language Acquisition

Another significant area of research in the PhonLab concerns the study of phonological learning. Ongoing work in this area includes both the study of child speech acquisition and the development and comparison of theoretical and computational models of phonology, its interfaces with other grammatical components such as morphosyntax and phonetics, and language acquisition. Research on childhood development has focused on how speech develops alongside the anatomical changes of childhood, as well as on the acquisition of phonological structures such as complex segments, e.g. lateral /Cl/ clusters.

Research on models of phonology and phonological acquisition employs experimental methods, including nonce probe studies and artificial grammar learning experiments, to validate theoretical proposals and to deepen our understanding of language learning by children and the biases they carry. Recent research has focused on phonetic naturalness bias in phonological learning, as well as the acquisition of morpheme-specific phonology in Slovene and French.

Adler, Jeffrey & Jesse Zymet. Irreducible parallelism in phonology: evidence for lookahead from Mohawk, Maragoli, Lithuanian, and Sino-Japanese. Accepted with minor revisions to Natural Language and Linguistic Theory.

Byun, Tara McAllister, Sharon Inkelas, and Yvan Rose. 2016. "The A-map model: articulatory reliability in child-specific phonology." Language 92(1). 141–178.

Cychosz, Meg, Jan Edwards, Benjamin Munson, and Keith Johnson. 2019. Spectral and temporal measures of coarticulation in child speech: A validation study.

Cychosz, Meg. 2019. Holistic lexical storage: Coarticulatory evidence from child speech. To appear in Proceedings of the 19th International Congress of Phonetic Sciences. Melbourne, Australia.

Glewwe, Eleanor, Jesse Zymet, Jacob Adams, Rachel Jacobson, Anthony Yates, Ann Zeng, and Robert Daland. 2018. Substantive bias and final (de)voicing: an artificial grammar learning study. Talk presented at the 2018 Annual Meeting of the Linguistic Society of America, Salt Lake City, Utah. Presenting authors: Eleanor Glewwe and Jesse Zymet.

Lin, Susan & Katherine Demuth. 2015. Children’s acquisition of English onset and coda /l/: articulatory evidence, Journal of Speech, Language, and Hearing Research, 58:13–27.

Risdal, Megan, Ann Aly, Adam Chong, Patricia Keating, and Jesse Zymet. 2016. The relationship between sonority and glottal vibration. Talk presented by Megan Risdal at CUNY Phonology Forum on Sonority, University of Ithaca. Presenting author: Megan Risdal.

Zymet, Jesse. 2019. Learning a frequency-matching grammar together with lexical idiosyncrasy: MaxEnt versus mixed-effects logistic regression. In Katherine Hout, Anna Mai, Adam McCollum, Sharon Rose & Matthew Zaslansky (eds.), Proceedings of the 2018 Annual Meeting on Phonology. Washington, DC: Linguistic Society of America.

Zymet, Jesse. To appear. Malagasy OCP targets a single affix: implications for morphosyntactic generalization in learning. Linguistic Inquiry.

Phonetic Neuroscience

Collaborators for Phonetic Neuroscience research are Edward Chang (UCSF Department of Neurosurgery), John Houde and Sri Nagarajan (UCSF Speech Neuroscience Lab), Bob Knight (Cognitive Neuroscience Research Lab, UCB Psychology), and Marc Ettlinger (Martinez Veterans Affairs, Speech and Hearing Research Lab).

Publications from these collaborations have appeared in Brain and Language, Nature Neuroscience, Science, Nature, PLoS One, and eLife.

Bouchard, Kristofer E.; David F. Conant, Gopala K. Anumanchipalli, Benjamin Dichter, Kris S. Chaisanguanthum, Keith Johnson, and Edward F. Chang. 2016. High-Resolution, Non-Invasive Imaging of Upper Vocal Tract Articulators Compatible with Human Brain Recordings. PLOS ONE http://dx.doi.org/10.1371/journal.pone.0151327

Bouchard, Kristofer E.; Nima Mesgarani, Keith Johnson, and Edward F. Chang. 2013. Functional organization of human sensorimotor cortex for speech articulation. Nature 495(7441), 327-32.

Cibelli, Emily S.; Matthew K. Leonard, Keith Johnson, and Edward F. Chang. 2015. The influence of lexical statistics on temporal lobe cortical dynamics during spoken word listening. Brain and Language 147, 66-75.

Chartier, Josh; Gopala K. Anumanchipalli, Keith Johnson, and Edward F. Chang. 2018. Encoding of articulatory kinematic trajectories in human speech sensorimotor cortex, Neuron 98, 1042-1054.

Cheung, Connie; Liberty Hamilton, Keith Johnson, and Edward F. Chang. 2015. The auditory representation of speech sounds in human motor cortex. eLife 2016;5, e12577.

Mesgarani, Nima; Connie Cheung, Keith Johnson, and Edward F. Chang. 2014. Phonetic feature encoding in human superior temporal gyrus. Science 28;343(6174), 1006-10.

Causes and Consequences of Pronunciation Variation

Research in the lab dealing with pronunciation variation includes work on the acoustics of code-switching (Shen, Gahl, & Johnson, 2018) and on the effects of phonological neighborhood density on lexical access and pronunciation.

Shen, Alice, Susanne Gahl, and Keith Johnson. 2018. "Effects of phonetic cues on processing Mandarin-English code switches in sentence comprehension". Poster presented at CUNY2018.

Gahl, Susanne, Yao Yao, and Keith Johnson. 2012. Why reduce? Phonological neighborhood density and phonetic reduction in spontaneous speech. Journal of Memory and Language 66, 789-806.

Gahl, Susanne. 2015. Lexical competition in vowel articulation revisited: Vowel dispersion in the Easy/Hard database. Journal of Phonetics 49, 96-116.

Sociophonetics

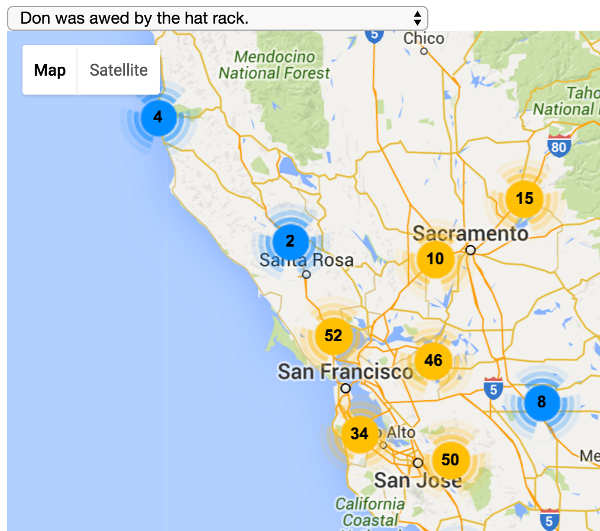

The sociolinguistics of phonetic identity (particularly in regional dialect and in gender and sexual identity) and of phonetic accommodation is a focus of interest and energy in the PhonLab. Research in sociophonetics is made possible by an audio-video recording studio, by the conversational language recording space SPARCL, and by great web application programming support from Ronald Sprouse.

The SPARCL (Sociophonetic Area for Recording Conversational Language) is a resource for conducting research in the areas of sociolinguistics, sociophonetics, discourse analysis, and gesture analysis. It is designed for eliciting naturalistic speech data in a comfortable environment. The lab is equipped with video cameras and high-quality audio equipment and is furnished in a living-room style. Financial support for SPARCL was provided by the Department of Linguistics, the Department of Spanish and Portuguese (thanks Justin Davidson), and by a UC Berkeley "Student Technology Fund" award won by lab graduate students.

Recent research includes the "Voices of Berkeley" project, which collected phonetic data from over 700 students at Berkeley using the students' own computers and a web interface. We have recently begun to publish results of this project and to make plans for a larger, more representative sample.

Barron-Lutzross, Auburn. 2017. "Personas, personalities, and stereotypes of lesbian speech." Presented at the 46th Meeting of New Ways of Analyzing Variation.

Barron-Lutzross, Auburn, Andrew Cheng, Alice Shen, Eric Wilbanks, and Azin Mirzaagha. 2018. Do Personal and Interpersonal Power Influence Phonetic Accommodation? Presented at Laboratory Phonology 16, Lisbon, Portugal.

Cheng, Andrew. 2019. Style-Shifting, Bilingualism, and the Koreatown Accent. Presented at the 93rd Annual Meeting of the Linguistic Society of America.

Cheng, Andrew. 2018. More than Pitch Perfect: A Longitudinal Acoustic Study of a Male-to-Female Transgender Video Blogger. Presented at the 92nd Annual Meeting of the Linguistic Society of America.

Johnson, Keith & Molly Babel. 2010. On the perceptual basis of distinctive features: Evidence from the perception of fricatives by Dutch and English speakers. Journal of Phonetics, 38 (1), 127-136.

Remirez, Emily. 2019. Perception of (mis)matching sociolinguistic cues in an episodic framework. Poster at PHonetics and phonology Research weekEND (PHREND) 2019.

Wilbanks, Eric. 2019. Modeling the influence of confidence in social cues during speech perception using Gaussian mixture models. International Congress of Phonetic Sciences. Melbourne, Australia.

Funding

The lab has received funding from the National Science Foundation, the National Institutes of Health, the Peder-Sather Center, the France-Berkeley Fund, and the Holbrook Fund.

Graduate student research has been supported by the National Science Foundation, the UC Berkeley Graduate Division, the UC Berkeley Council of Graduate Students, the Abigail Hodgen Fund for Women in the Social Sciences, Oswalt Endangered Language Grants, and, of course, the Department of Linguistics.